As part of my idea to implement Yandex Disk as an Active Storage service, I decided to add the PROPPATCH method to the Yandex Disk gem.

As described in the WebDAV documentation, all we need to do is make a special PROPPATCH request with the path of our folder or file.
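To make the request shape concrete, here is a minimal sketch that builds (but does not send) a PROPPATCH request with Ruby's standard library. The body follows the generic WebDAV `propertyupdate` format. The property name `note`, its namespace `urn:example:props`, the `OAuth` authorization scheme and the host in the final comment are assumptions for illustration, not something taken from the gem.

```ruby
require "net/http"

# Builds a WebDAV PROPPATCH request that sets one property on the given path.
# prop_name and prop_ns are hypothetical; value is assumed to be XML-safe.
def build_proppatch(path, token:, prop_name:, prop_ns:, value:)
  body = <<~XML
    <?xml version="1.0" encoding="utf-8"?>
    <D:propertyupdate xmlns:D="DAV:">
      <D:set>
        <D:prop>
          <#{prop_name} xmlns="#{prop_ns}">#{value}</#{prop_name}>
        </D:prop>
      </D:set>
    </D:propertyupdate>
  XML

  request = Net::HTTP::Proppatch.new(path)
  request["Authorization"] = "OAuth #{token}" # assumed auth scheme
  request["Content-Type"] = "application/xml; charset=utf-8"
  request.body = body
  request
end

request = build_proppatch(
  "/folder/file.png",
  token: "XXXXXXXX",
  prop_name: "note",
  prop_ns: "urn:example:props",
  value: "hello"
)

# Sending it would look roughly like this (host is an assumption, left
# commented out so the sketch runs offline):
# Net::HTTP.start("webdav.yandex.ru", 443, use_ssl: true) { |http| http.request(request) }
```

Building the request separately from sending it keeps the XML easy to inspect before any network call is made.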

We need to set the proper option in development.rb:

    config.active_storage.service = :yandex_disk

Then we need to add a special section to the storage.yml file:

    yandex_disk:
      service: YandexDisk
      access_token: "XXXXXXXXXXXXXXXXXXXXXXXX"

Let’s create /lib/active_storage/service/yandex_disk_service.rb. We need to inherit our new service from Service:

    require "yandex/disk"

    module ActiveStorage
      # Wraps the Yandex Disk Service as an Active Storage service.
      # See ActiveStorage::Service for the generic API documentation that applies to all services.
      class Service::YandexDiskService < Service
        attr_reader :client

        def initialize(access_token:)
          @client = Yandex::Disk::Client.new(access_token: access_token)
        end

        def upload(key, io, checksum: nil)
          instrument :upload, key: key, checksum: checksum do
            begin
              # Pseudocode: pass a local file path here, or read from the IO object.
              @client.put(LOCAL_FILE_PATH, DESTINATION_FILE_PATH)
            rescue
              raise ActiveStorage::IntegrityError
            end
          end
        end
      end
    end

Of course, it’s not a final implementation: we still need to implement the other methods, as the current active_storage services do.
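One way the pseudocode `put` call could be filled in is sketched below. It assumes only that the client exposes `put(local_path, remote_path)`, as in the draft, and that the blob key is used as the remote path (`"/#{key}"`), which is a guess. `FakeDiskClient`, `with_local_path` and `upload_to_disk` are hypothetical names used so the example runs without network access.

```ruby
require "stringio"
require "tempfile"

# Hypothetical stand-in for the real client. It records what would be uploaded.
class FakeDiskClient
  attr_reader :uploads

  def initialize
    @uploads = {}
  end

  def put(local_path, remote_path)
    @uploads[remote_path] = File.binread(local_path)
  end
end

# Yields a local file path for any IO-like object. File-backed IOs are used
# directly; anything else is copied into a temporary file first.
def with_local_path(io)
  if io.respond_to?(:path) && io.path && File.exist?(io.path)
    yield io.path
  else
    Tempfile.create("upload") do |tmp|
      tmp.binmode
      IO.copy_stream(io, tmp)
      tmp.flush
      yield tmp.path
    end
  end
end

# Uploads the IO under the given key. The "/#{key}" remote path is an assumption.
def upload_to_disk(client, key, io)
  with_local_path(io) { |path| client.put(path, "/#{key}") }
end

client = FakeDiskClient.new
upload_to_disk(client, "SSkvsod6Vme8Q4g3qPu7sicm", StringIO.new("png bytes"))
```

Inside the service, the body of `upload` would then call something like `upload_to_disk(client, key, io)` within the `instrument` block.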

For reference, this is the azure_storage_service.rb that ships with Rails:

    # frozen_string_literal: true

    require "active_support/core_ext/numeric/bytes"
    require "azure/storage"
    require "azure/storage/core/auth/shared_access_signature"

    module ActiveStorage
      # Wraps the Microsoft Azure Storage Blob Service as an Active Storage service.
      # See ActiveStorage::Service for the generic API documentation that applies to all services.
      class Service::AzureStorageService < Service
        attr_reader :client, :path, :blobs, :container, :signer

        def initialize(path:, storage_account_name:, storage_access_key:, container:)
          @client = Azure::Storage::Client.create(storage_account_name: storage_account_name, storage_access_key: storage_access_key)
          @signer = Azure::Storage::Core::Auth::SharedAccessSignature.new(storage_account_name, storage_access_key)
          @blobs = client.blob_client
          @container = container
          @path = path
        end

        def upload(key, io, checksum: nil)
          instrument :upload, key: key, checksum: checksum do
            begin
              blobs.create_block_blob(container, key, io, content_md5: checksum)
            rescue Azure::Core::Http::HTTPError
              raise ActiveStorage::IntegrityError
            end
          end
        end

        def download(key, &block)
          if block_given?
            instrument :streaming_download, key: key do
              stream(key, &block)
            end
          else
            instrument :download, key: key do
              _, io = blobs.get_blob(container, key)
              io.force_encoding(Encoding::BINARY)
            end
          end
        end

        def delete(key)
          instrument :delete, key: key do
            begin
              blobs.delete_blob(container, key)
            rescue Azure::Core::Http::HTTPError
              # Ignore files already deleted
            end
          end
        end

        def delete_prefixed(prefix)
          instrument :delete_prefixed, prefix: prefix do
            marker = nil

            loop do
              results = blobs.list_blobs(container, prefix: prefix, marker: marker)

              results.each do |blob|
                blobs.delete_blob(container, blob.name)
              end

              break unless marker = results.continuation_token.presence
            end
          end
        end

        def exist?(key)
          instrument :exist, key: key do |payload|
            answer = blob_for(key).present?
            payload[:exist] = answer
            answer
          end
        end

        def url(key, expires_in:, filename:, disposition:, content_type:)
          instrument :url, key: key do |payload|
            base_url = url_for(key)
            generated_url = signer.signed_uri(
              URI(base_url), false,
              permissions: "r",
              expiry: format_expiry(expires_in),
              content_disposition: content_disposition_with(type: disposition, filename: filename),
              content_type: content_type
            ).to_s

            payload[:url] = generated_url

            generated_url
          end
        end

        def url_for_direct_upload(key, expires_in:, content_type:, content_length:, checksum:)
          instrument :url, key: key do |payload|
            base_url = url_for(key)
            generated_url = signer.signed_uri(URI(base_url), false, permissions: "rw",
              expiry: format_expiry(expires_in)).to_s

            payload[:url] = generated_url

            generated_url
          end
        end

        def headers_for_direct_upload(key, content_type:, checksum:, **)
          { "Content-Type" => content_type, "Content-MD5" => checksum, "x-ms-blob-type" => "BlockBlob" }
        end

        private

        def url_for(key)
          "#{path}/#{container}/#{key}"
        end

        def blob_for(key)
          blobs.get_blob_properties(container, key)
        rescue Azure::Core::Http::HTTPError
          false
        end

        def format_expiry(expires_in)
          expires_in ? Time.now.utc.advance(seconds: expires_in).iso8601 : nil
        end

        # Reads the object for the given key in chunks, yielding each to the block.
        def stream(key)
          blob = blob_for(key)

          chunk_size = 5.megabytes
          offset = 0

          while offset < blob.properties[:content_length]
            _, chunk = blobs.get_blob(container, key, start_range: offset, end_range: offset + chunk_size - 1)
            yield chunk.force_encoding(Encoding::BINARY)
            offset += chunk_size
          end
        end
      end
    end

Let’s take a look at how our draft implementation works with our Job model, which has a company_logo attachment:

    require 'yandex/disk'

    yandex_service = ActiveStorage::Service::YandexDiskService.new(access_token: 'XXXXXXXXXXX')

    job = Job.new
    job.company_logo.attach(io: File.open(Rails.root.join("public", "google.png")), filename: 'google.png', content_type: "image/png")

And the output:

irb(main):009:0> job = Job.first

=> #<Job id: 1, title: "Rails developer", description: "New Jobs", email: "test@google.com", company: "Google", website: "http://google.com", salary_min: 100, salary_max: 1000, currency: "", status: nil, approved: nil, expire_at: nil, approved_at: nil, created_at: "2018-02-03 17:55:21", updated_at: "2018-02-03 22:51:40">

irb(main):010:0> job.company_logo.attach(io: File.open(Rails.root.join("public", "google.png")), filename: 'google.png' , content_type: "image/png")

YandexDisk Storage (1099.5ms) Uploaded file to key: SSkvsod6Vme8Q4g3qPu7sicm (checksum: Es4hFkEdVEWDUDrSm6qrhw==)

(0.3ms) BEGIN

ActiveStorage::Blob Create (18.6ms) INSERT INTO "active_storage_blobs" ("key", "filename", "content_type", "metadata", "byte_size", "checksum", "created_at") VALUES ($1, $2, $3, $4, $5, $6, $7) RETURNING "id" [["key", "SSkvsod6Vme8Q4g3qPu7sicm"], ["filename", "google.png"], ["content_type", "image/png"], ["metadata", "{\"identified\":true}"], ["byte_size", 13774], ["checksum", "Es4hFkEdVEWDUDrSm6qrhw=="], ["created_at", "2018-02-03 22:54:29.809896"]]

(5.9ms) COMMIT

(0.2ms) BEGIN

ActiveStorage::Attachment Create (6.2ms) INSERT INTO "active_storage_attachments" ("name", "record_type", "record_id", "blob_id", "created_at") VALUES ($1, $2, $3, $4, $5) RETURNING "id" [["name", "company_logo"], ["record_type", "Job"], ["record_id", 1], ["blob_id", 6], ["created_at", "2018-02-03 22:54:29.850081"]]

Job Update All (0.4ms) UPDATE "jobs" SET "updated_at" = '2018-02-03 22:54:29.857617' WHERE "jobs"."id" = $1 [["id", 1]]

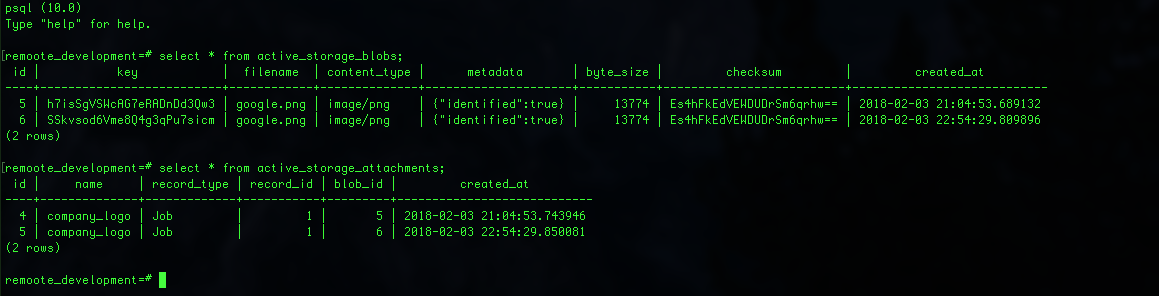

(6.1ms) COMMITIf you do a simple select on ActiveStorage tables, you can see our uploaded image information.
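The `checksum` value in the log is a Base64-encoded MD5 digest of the file, which Active Storage computes and passes to `upload`. The draft service ignores it. As a hedged sketch, a check could look like the following. `base64_md5`, `verify_checksum!` and `ChecksumMismatch` are hypothetical names, and a real service would more likely raise `ActiveStorage::IntegrityError` instead.

```ruby
require "digest/md5"
require "stringio"

# Hypothetical error class, used here so the sketch runs without Rails.
class ChecksumMismatch < StandardError; end

# Computes the Base64-encoded MD5 digest of an IO, reading it in chunks
# and rewinding it afterwards so it can still be uploaded.
def base64_md5(io, chunk_size: 5 * 1024 * 1024)
  digest = Digest::MD5.new
  while (chunk = io.read(chunk_size))
    digest << chunk
  end
  io.rewind
  digest.base64digest
end

# Raises ChecksumMismatch when the IO's digest differs from the expected one.
def verify_checksum!(io, expected)
  actual = base64_md5(io)
  return true if actual == expected

  raise ChecksumMismatch, "expected #{expected}, got #{actual}"
end

io = StringIO.new("example file contents")
expected = Digest::MD5.base64digest("example file contents")
verify_checksum!(io, expected)
```

Calling such a check before `put` would let the service reject a corrupted upload instead of reporting every client failure as an integrity error.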

Of course, this is not a final implementation, just a draft. I’ll continue working on it when I have more free time, and I hope the main idea is clear.